According to a recent report by Fortune Business Insights, the global cybersecurity market will grow from $112 billion in 2019 to over $280 billion by 2027. Most technical leaders know that security is a very crowded market with many product categories and specialties.

Over the last 20 years, Machine Learning (ML) techniques have played a vital role in building prediction and intelligence into cybersecurity capabilities. Many systems today are smart enough to separate background noise from real security threats. Using specialized forms of unsupervised learning to train adaptive algorithms, we have been able to impede zero-day attacks. Machine learning has also helped the industry by automating the curation of millions of data points from research documents, posts, articles, and alert mechanisms all over the world.

Despite the technological progress and substantial financial investment, breaches are on the increase. Cognizant suffered a Maze ransomware attack last Friday. The IOCs (Indicators of Compromise) included IP addresses of servers hosting kepstl32.dll, memes.tmp, and maze.dll files. Many ransomware exploits of this type may exist within an enterprise undetected, moving laterally across systems to capture credentials and data files.

Cyber-attacks are the last thing hospitals need right now.

The healthcare sector is especially vulnerable because many attractive target attributes coalesce under one roof – identity theft, financial breach, biomedical device hacking, ransomware on mission-critical operations, legacy system patching, and reactive processes. A recent Homeland Security article by the DHS Intelligence Enterprise (DHS IE) highlighted that cyber targeting of the US public health and healthcare sector is likely to increase during the COVID-19 pandemic. The report details various advanced persistent threats (APTs) against the World Health Organization, the Department of Health and Human Services, and the Centers for Disease Control, along with regional and state healthcare providers.

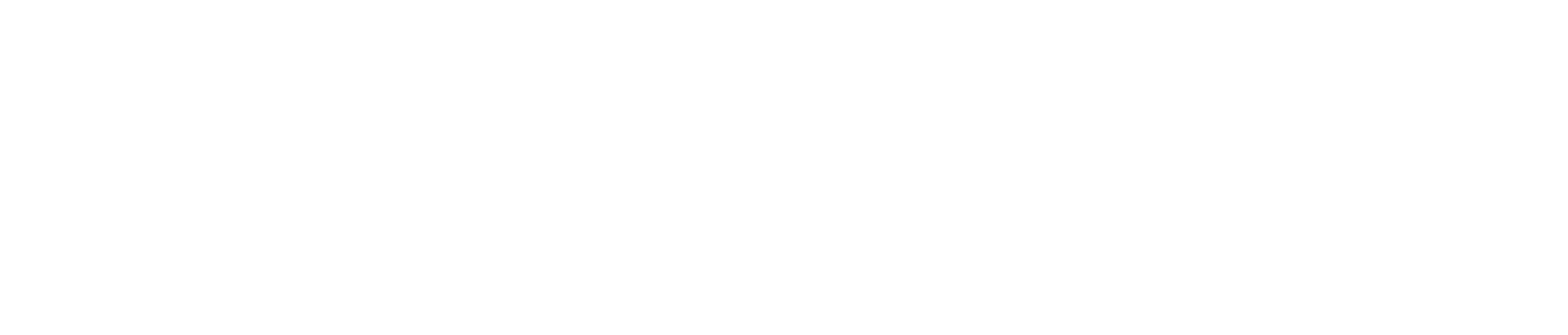

The table below summarizes just a few examples of where machine learning has already been hard at work in contemporary cybersecurity applications.

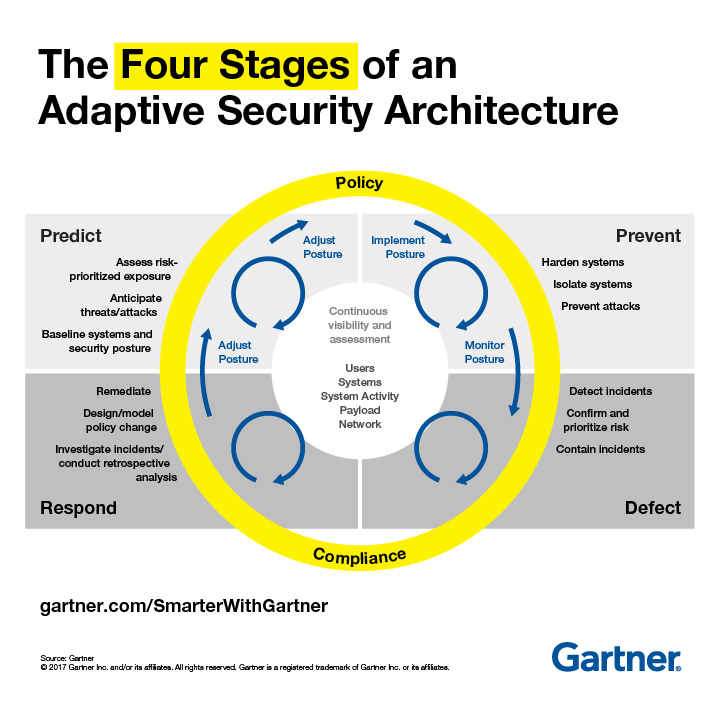

Several constructs have been proposed to describe the key things machine learning can do. We call these capability frameworks. Most of them describe the evolution of capability, as shown below:

I want to contrast this machine learning capability framework with a generally accepted security management approach, such as Gartner’s Adaptive Security Architecture (below; note the diagram from Gartner in the lower right corner should say “Detect.”). Many security solutions on the market aim to demonstrate compliance with Gartner’s PPDR model. So far, so good. However, as you can see, the entry point is Prediction. Given a set of circumstances, scenarios, and anomalies, the machine learning algorithms in these systems raise alerts or make predictions based on their training. The detection mechanism operates similarly. Many of the systems can perform continuous learning in real time.

I am amazed by how many security firms want to layer in trained human security analysts to incorporate qualitative factors designed to improve effectiveness. Adding more humans runs counter to where machine learning intends to go.

In threat intelligence, numerous external databases such as the National Vulnerability Database (NVD) are used with automation to arrive at a CVSSv3 (Common Vulnerability Scoring System) score, for example. Insider threat detection is one area using more advanced forms of machine learning, such as Deep Neural Networks (DNNs) and Recurrent Neural Networks (RNNs). Training enables the algorithm to recognize activity that is characteristic of an active user and concurrently determine whether that user's behavior is normal or abnormal – all in real time. Measured against ML capability frameworks, the cybersecurity machine is doing an excellent job of Perceiving and Understanding. However, only by Performing actions can the machine determine whether it succeeds or fails, thereby enabling it to Learn more quickly. A failure would be a false positive of a security event.
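To make the "normal or abnormal" decision concrete, here is a minimal sketch of per-user behavioral baselining. It deliberately swaps the DNNs and RNNs mentioned above for a plain z-score so that it fits in a few lines; the function names, the threshold of 3.0, and the sample data are all hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def anomaly_score(history, observed):
    """Return how many standard deviations `observed` lies from the baseline."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs >= 2 points
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is maximally unusual.
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


def is_abnormal(history, observed, threshold=3.0):
    """Flag the observation when it is more than `threshold` deviations out."""
    return anomaly_score(history, observed) > threshold


# Hypothetical data: files one user opened per hour in recent sessions.
baseline = [12, 15, 11, 14, 13, 12, 16, 14]

print(is_abnormal(baseline, 13))   # False: in line with past behavior
print(is_abnormal(baseline, 240))  # True: sudden bulk access
```

A production system would model many features and their sequence over time, which is where recurrent networks come in, but the shape of the decision is the same: learn what is characteristic of a user, then score each new event against it.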

Cybersecurity applications need to self-learn automatically.

In cybersecurity practice, you know what you’re looking for in a classification sense, and you know how to detect outliers or anomalies with unsupervised learning systems. But you don’t know what you can’t see, because you’re looking for specific things based on training and not necessarily collecting the many data points that can help your machine truly Perceive its environment. We are training cybersecurity systems to learn from what we feed them; we’re just not training them to Learn for themselves at a more rapid pace. The same concept applies to autonomous driving. If you had to program every use case of traffic, roads, signs, languages, weather, vehicles, and laws, you would not be able to put enough humans to work labeling all those training datasets.

We need our cybersecurity systems to become smarter by themselves, with as little human intervention as possible. If a system can only respond to what it detects, it may not know how to respond to something new that it has never seen before. A human can always take the wheel of a self-driving car, and a security analyst can do the same. We need more machine-assisted analysts using self-learning cyber tools.
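As a rough illustration of what a self-learning tool with an analyst at the wheel might look like, the sketch below keeps a running baseline that updates itself from every event it does not flag, and uses an analyst's false-positive verdict as the only human input. The class name, parameters, and sample values are hypothetical; the running statistics use Welford's online algorithm, and the threshold adjustment is a simple assumed heuristic rather than an established method.

```python
import math


class SelfTuningDetector:
    """Online anomaly detector that learns from events and analyst feedback."""

    def __init__(self, threshold=3.0, step=0.25, warmup=5):
        self.n = 0            # number of events folded into the baseline
        self.mean = 0.0       # running mean
        self.m2 = 0.0         # running sum of squared deviations
        self.threshold = threshold
        self.step = step      # how much one false positive relaxes the threshold
        self.warmup = warmup  # events to absorb before flagging anything

    def _update(self, x):
        # Welford's algorithm: update mean and variance without storing history.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def score(self, x):
        if self.n < 2:
            return 0.0
        std = math.sqrt(self.m2 / (self.n - 1))
        if std == 0:
            return 0.0 if x == self.mean else math.inf
        return abs(x - self.mean) / std

    def observe(self, x):
        """Return True if x is flagged; unflagged events update the baseline."""
        flagged = self.n >= self.warmup and self.score(x) > self.threshold
        if not flagged:
            self._update(x)
        return flagged

    def feedback(self, x, false_positive):
        """Analyst verdict on a flagged event; a false positive is a lesson."""
        if false_positive:
            self._update(x)              # the event was benign, so absorb it
            self.threshold += self.step  # and be slightly less trigger-happy


# Hypothetical stream: logins per hour for one service account.
detector = SelfTuningDetector()
for logins in [4, 5, 6, 5, 4, 6, 5]:
    detector.observe(logins)

print(detector.observe(30))  # True: far outside the learned baseline
detector.feedback(30, false_positive=True)
print(detector.threshold)    # 3.25
```

The analyst is consulted only on flagged events, and each verdict changes how the detector behaves next time, which is the Perform-then-Learn loop in miniature.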