Anthropic’s Claude Sonnet 4.5: A Technical Deep Dive into the World’s Best Coding Model

Anthropic has released Claude Sonnet 4.5, a significant step forward in AI capabilities for software development, computer use, and complex reasoning. The release is more than an incremental update: it substantially expands what language models can do in production environments.

Benchmark Performance: Setting New Standards

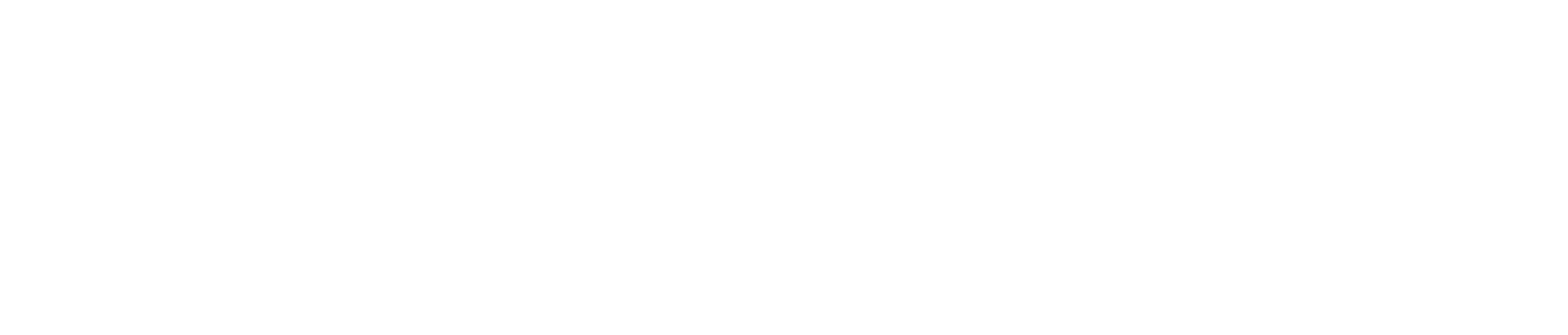

Claude Sonnet 4.5 scores 77.2% on SWE-bench Verified, making it the state-of-the-art coding model. The benchmark measures real-world software engineering ability using actual GitHub issues from open-source Python repositories. The model was evaluated with a simple scaffold exposing only two tools, bash execution and file editing via string replacement, and the reported score is the average of 10 trials with a 200K-token thinking budget and no additional test-time compute.
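As a rough illustration of what averaging over trials means, the sketch below treats each trial as one full pass over the 500-problem SWE-bench Verified set and reports the mean resolve rate together with its run-to-run spread. The function name and the per-trial counts in the usage line are hypothetical, not Anthropic’s harness or data.

```python
from statistics import mean, stdev


def averaged_score(resolved_per_trial: list[int], num_problems: int = 500) -> tuple[float, float]:
    """Return (mean resolve rate, sample standard deviation), both in percent.

    resolved_per_trial: number of problems resolved in each independent trial.
    """
    if not resolved_per_trial:
        raise ValueError("need at least one trial")
    rates = [100.0 * resolved / num_problems for resolved in resolved_per_trial]
    spread = stdev(rates) if len(rates) > 1 else 0.0  # stdev needs two or more points
    return mean(rates), spread


# Hypothetical per-trial counts (made up for illustration, not real results).
avg, sd = averaged_score([380, 390, 385, 375])
print(f"mean = {avg:.1f}%, std = {sd:.2f} percentage points")
```

Reporting the spread alongside the mean helps readers judge whether a gap between two models is larger than ordinary run-to-run noise.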

The score rises further with additional test-time compute. Using parallel sampling, regression-test filtering, and candidate selection via an internal scoring model, Claude Sonnet 4.5 reaches 82.0% on SWE-bench Verified. In this high-compute setting, several candidate patches are generated, those that break existing tests are discarded, and the scoring model picks among the rest.
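The three steps named above can be sketched as a best-of-n pipeline. This is a minimal illustration under stated assumptions: `sample` stands in for one model attempt plus a regression-test run, and `score` stands in for Anthropic’s internal scoring model, whose details are not public. All names here are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    patch: str               # a candidate diff produced by one sampled attempt
    passes_regression: bool  # True if the repository's existing tests still pass


def best_of_n(
    sample: Callable[[int], Candidate],   # hypothetical: one model attempt + test run
    score: Callable[[Candidate], float],  # stand-in for the internal scoring model
    n: int,
) -> Optional[Candidate]:
    """Sample n candidates in parallel, drop regressions, return the top-scoring survivor."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # 1. Parallel sampling: independent attempts run concurrently.
    with ThreadPoolExecutor(max_workers=n) as pool:
        candidates = list(pool.map(sample, range(n)))
    # 2. Regression-test filtering: discard patches that break existing tests.
    survivors = [c for c in candidates if c.passes_regression]
    if not survivors:
        return None
    # 3. Candidate selection: keep the survivor the scorer rates highest.
    return max(survivors, key=score)


# Toy usage with fake attempts and made-up scores.
fake = [Candidate("patch-a", True), Candidate("patch-b", False), Candidate("patch-c", True)]
toy_scores = {"patch-a": 0.4, "patch-b": 0.9, "patch-c": 0.7}
best = best_of_n(lambda i: fake[i], lambda c: toy_scores[c.patch], n=3)
print(best.patch)  # patch-c: patch-b scores higher but fails the regression tests
```

Filtering before scoring means the scorer never has to rank patches that are already known to be broken, which is the point of the regression-test step.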

Computer Use: A 45% Jump in Four Months

On OSWorld, a benchmark of real-world computer tasks, Sonnet 4.5 scores 61.4%, up from 42.2% for Claude Sonnet 4, which was released four months earlier. That is a 45% relative improvement in the model’s ability to navigate interfaces, manipulate spreadsheets, and complete multi-step computer-based workflows.
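The 45% figure is a relative gain, not a gain in percentage points. The arithmetic below uses only the two OSWorld scores quoted above; the helper name is illustrative.

```python
def relative_improvement(new: float, old: float) -> float:
    """Percentage change from old to new, relative to old."""
    return 100.0 * (new - old) / old


absolute_points = 61.4 - 42.2  # OSWorld: Sonnet 4.5 vs Sonnet 4
relative_pct = relative_improvement(61.4, 42.2)
print(f"+{absolute_points:.1f} points, +{relative_pct:.1f}% relative")  # +19.2 points, +45.5% relative
```

The exact relative value is just under 45.5%, which rounds to the 45% in the heading.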

Sustained Focus on Long-Horizon Tasks

Perhaps most striking, Anthropic reports observing the model maintain focus for more than 30 hours on complex, multi-step tasks. That kind of endurance matters for real-world software development, where projects require sustained reasoning across large codebases and many interdependent components.

Technical Architecture and Capabilities

Advanced Reasoning and Mathematics

Claude Sonnet 4.5 demonstrates substantial gains across reasoning benchmarks:

- AIME (mathematics competition problems): significant improvement, evaluated with a 64K reasoning-token budget and temperature 1.0 sampling

- MMMLU (multilingual understanding): scores averaged across 14 non-English languages, with extended thinking budgets of up to 128K tokens

- Domain-specific evaluations in finance, law, medicine, and STEM: “dramatically better” knowledge and reasoning compared to Opus 4.1

Parallel Tool Execution and Efficiency

Sonnet 4.5 makes more aggressive use of parallel tool calls. Rather than issuing one bash command per turn, it can request several independent commands at once, which the agent harness can run concurrently; this gets more done per turn and per context window. The gain is largest in coding workflows, where operations such as reading several files or running independent searches do not depend on each other.
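In the Claude Messages API, parallel tool use shows up as several `tool_use` blocks in one assistant response; the client runs them and returns one `tool_result` block per call, matched by `tool_use_id`. The sketch below covers only that client side, for a tool assumed to be named `bash` that takes a `{"command": ...}` input. The helper names are hypothetical, and a real harness would sandbox these commands.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def run_bash(command: str) -> str:
    """Run one shell command and return its stdout followed by stderr.

    A command exceeding the timeout raises subprocess.TimeoutExpired to the caller.
    """
    proc = subprocess.run(command, shell=True, capture_output=True, text=True, timeout=60)
    return proc.stdout + proc.stderr


def execute_tool_calls(tool_uses: list[dict]) -> list[dict]:
    """Run independent bash tool calls concurrently.

    Assumes every block is a call to a tool named "bash" taking {"command": ...}.
    Returns tool_result blocks in the same order as the tool_use blocks.
    """
    commands = [block["input"]["command"] for block in tool_uses]
    with ThreadPoolExecutor(max_workers=max(1, len(commands))) as pool:
        outputs = list(pool.map(run_bash, commands))  # map preserves input order
    return [
        {"type": "tool_result", "tool_use_id": block["id"], "content": output}
        for block, output in zip(tool_uses, outputs)
    ]
```

All of the returned `tool_result` blocks go back together in the next user message, so one round trip covers every command the model requested.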

Extended Context Management

Sonnet 4.5 supports a context window of up to 1M tokens, although the primary reported scores use a 200K-token configuration. With the 1M-token configuration it scores 78.2% on SWE-bench Verified, slightly above the 77.2% primary result, so performance holds up at much larger context sizes.

Product Ecosystem Integration

Claude Code Enhancements

The release includes major updates to Claude Code, Anthropic’s command-line tool for agentic coding:

- Checkpoints: Save progress and roll back to previous states instantly

- Refreshed terminal interface: Improved developer experience

- Native VS Code extension: Direct integration with the most popular development environment

- Context editing and memory tools: Support longer-running agents that can take on more complex tasks
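At its simplest, context editing means removing stale material from an agent’s conversation so long runs stay within the context window. The sketch below shows one client-side version of that idea: it replaces the oldest tool results with a placeholder until an approximate token count fits a budget. It is a hypothetical illustration, not the Claude API’s built-in context-editing feature, and the 4-characters-per-token estimate is a loose assumption.

```python
import copy

PLACEHOLDER = "[tool result cleared]"


def approx_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token.
    return len(text) // 4


def total_tokens(messages: list[dict]) -> int:
    """Approximate token count over string content, text blocks, and tool results.

    tool_use inputs are ignored for simplicity.
    """
    total = 0
    for msg in messages:
        content = msg["content"]
        if isinstance(content, str):
            total += approx_tokens(content)
            continue
        for block in content:
            if block["type"] == "text":
                total += approx_tokens(block["text"])
            elif block["type"] == "tool_result":
                total += approx_tokens(str(block["content"]))
    return total


def clear_old_tool_results(messages: list[dict], budget: int, keep_recent: int = 2) -> list[dict]:
    """Return a copy of messages whose oldest tool results are replaced by a placeholder
    until the approximate token count fits within budget.

    The newest keep_recent tool results are always kept intact.
    """
    msgs = copy.deepcopy(messages)  # never mutate the caller's history
    locations = [
        (i, j)
        for i, msg in enumerate(msgs)
        if isinstance(msg["content"], list)
        for j, block in enumerate(msg["content"])
        if block["type"] == "tool_result"
    ]
    clearable = locations[:-keep_recent] if keep_recent > 0 else locations
    for i, j in clearable:  # oldest first
        if total_tokens(msgs) <= budget:
            break
        msgs[i]["content"][j]["content"] = PLACEHOLDER
    return msgs
```

Clearing tool results first is a natural choice because they are often large (file dumps, command output) and the model has usually already acted on them.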

Claude Agent SDK: Infrastructure for Production Agents

Anthropic is releasing the Claude Agent SDK, built on the same infrastructure that powers Claude Code. The SDK addresses fundamental challenges in agent development:

- Memory management across long-running tasks

- Permission systems balancing autonomy with user control

- Subagent coordination for complex, goal-oriented workflows

Although this infrastructure was built for a coding tool, the SDK is not limited to coding; it can be applied to agents in many other domains.
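One common way to balance autonomy with user control is to sort each tool call into allow, deny, or ask the user. The sketch below shows that pattern in isolation; the class, function, and tool names are hypothetical and do not reflect the Claude Agent SDK’s actual permission API.

```python
from dataclasses import dataclass, field
from typing import Callable


def deny_unlisted(tool_name: str, tool_input: dict) -> bool:
    """Default fallback: refuse anything that is not explicitly allowed."""
    return False


@dataclass
class PermissionPolicy:
    allowed: set[str] = field(default_factory=set)  # run without asking
    denied: set[str] = field(default_factory=set)   # never run
    ask: Callable[[str, dict], bool] = deny_unlisted  # e.g. prompt the user interactively

    def check(self, tool_name: str, tool_input: dict) -> bool:
        """Return True if the agent may run this tool call."""
        if tool_name in self.denied:  # deny takes precedence over allow
            return False
        if tool_name in self.allowed:
            return True
        return self.ask(tool_name, tool_input)


# Hypothetical usage: auto-approve reads, block a destructive tool, and
# approve only read-only git commands when asked.
policy = PermissionPolicy(
    allowed={"read_file"},
    denied={"delete_branch"},
    ask=lambda name, args: args.get("command", "").startswith("git status"),
)
```

Defaulting the fallback to "deny" keeps an unconfigured agent safe; widening `allowed` is how a user grants more autonomy.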

API and Application Features

The Claude API now includes context management features designed for long-running agents. The Claude web and mobile applications have integrated:

- Code execution directly in conversations

- File creation capabilities (spreadsheets, presentations, documents)

- Claude for Chrome extension for Max subscribers

Safety and Alignment: ASL-3 Protections

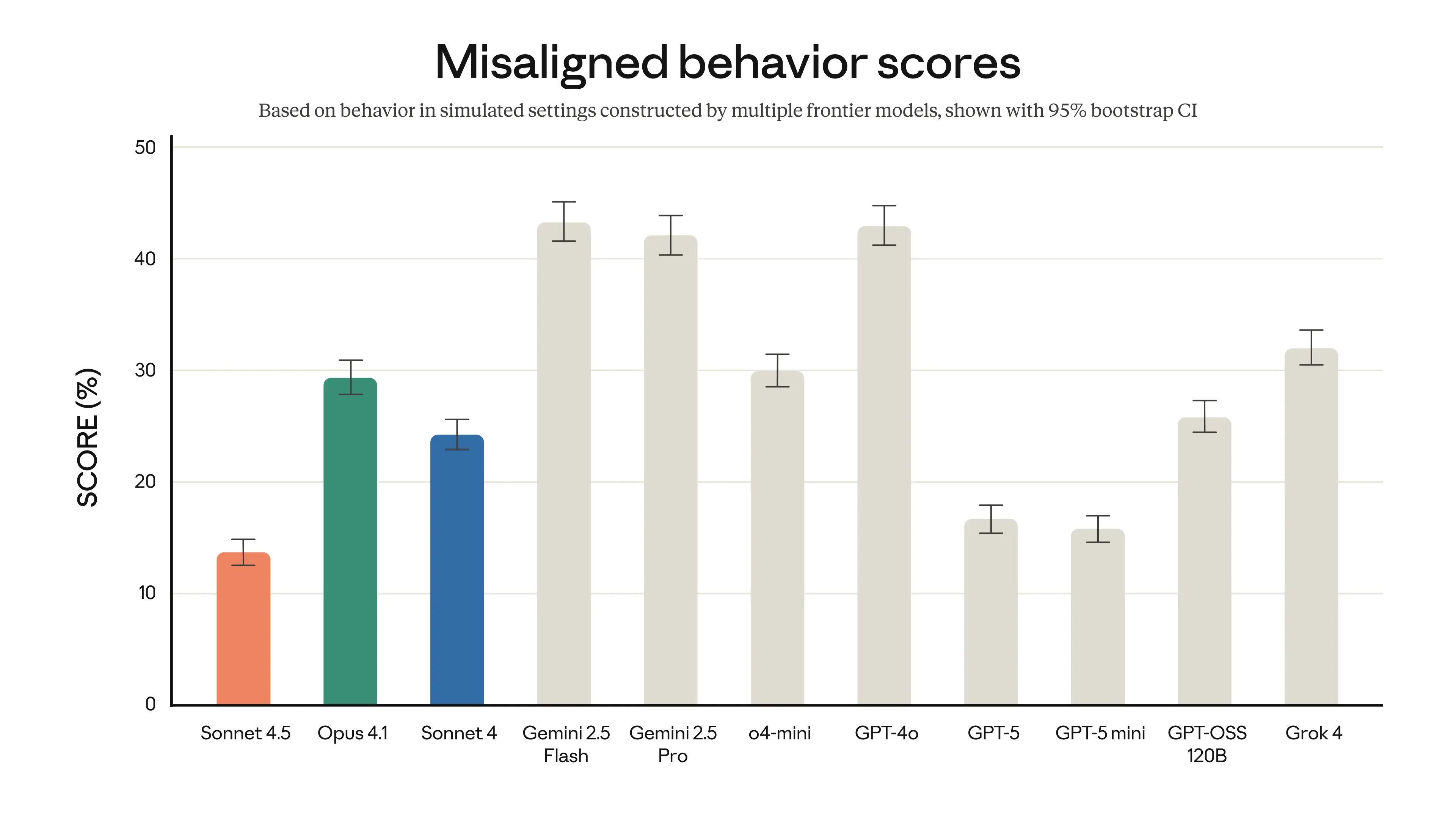

Claude Sonnet 4.5 is “the most aligned frontier model” Anthropic has released, with measurable improvements in:

- Reduced sycophancy

- Lower deception rates

- Decreased power-seeking behaviors

- Less tendency to encourage delusional thinking

- Improved defense against prompt injection attacks

The model is released under AI Safety Level 3 (ASL-3) protections, which include specialized classifiers for detecting potentially dangerous CBRN (chemical, biological, radiological, nuclear) content. Anthropic reports a 10x reduction in false positives since these classifiers were introduced, and a 2x improvement since Claude Opus 4’s May release.

For the first time, the safety evaluations include tests using mechanistic interpretability techniques, providing deeper insights into the model’s internal representations and decision-making processes.

Real-World Impact: Customer Testimonials

Early adopters report significant improvements:

- GitHub Copilot: “Significant improvements in multi-step reasoning and code comprehension”

- HackerOne (Hai security agents): 44% reduction in average vulnerability intake time, with accuracy improved by 25%

- Harvey (legal tech): “State of the art on the most complex litigation tasks”

- Replit: Error rate dropped from 9% on Sonnet 4 to 0% on internal code editing benchmarks

- Cognition (Devin): 18% increase in planning performance, 12% improvement in end-to-end evaluation scores

- Canva: Delivers “impressive gains on our most complex, long-context tasks” for 240M+ users

Pricing and Availability

Claude Sonnet 4.5 maintains the same pricing as Claude Sonnet 4: $3 per million input tokens and $15 per million output tokens. The model is available immediately via:

- The Claude API, using the model string claude-sonnet-4-5-20250929

- Claude web, mobile, and desktop applications

- Claude Code command-line interface

- Third-party integrations (Cursor, GitHub Copilot, etc.)
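To tie the model string to the pricing above, the sketch below builds (without sending) a request to the Claude Messages API using only the Python standard library, and estimates the cost of a call at $3 per million input tokens and $15 per million output tokens. The endpoint, headers, and body fields follow the public Messages API; the helper names are hypothetical, and the estimate ignores prompt caching, batch discounts, and any separate long-context pricing.

```python
import json
import urllib.request

MODEL = "claude-sonnet-4-5-20250929"
INPUT_USD_PER_MTOK = 3.00
OUTPUT_USD_PER_MTOK = 15.00


def build_request(api_key: str, prompt: str, max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) a Messages API request for Claude Sonnet 4.5."""
    body = {
        "model": MODEL,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        "https://api.anthropic.com/v1/messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD at list prices (no caching or batch discounts)."""
    return (input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK) / 1_000_000


req = build_request("YOUR_API_KEY", "Summarize this diff.")
# urllib.request.urlopen(req) would send it; the JSON reply has a "usage" object whose
# input_tokens and output_tokens can be passed to estimate_cost_usd.
print(f"${estimate_cost_usd(200_000, 20_000):.2f}")  # $0.90
```

Because output tokens cost five times as much as input tokens, long generated answers dominate the bill even when prompts are large.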

Technical Methodology Notes

The reported results depend on several evaluation choices that are worth keeping in mind when comparing scores:

- Multiple trial averaging to account for inference variability

- Regression test filtering to eliminate obviously broken solutions

- Prompt engineering with task-specific guidance (e.g., “use tools more than 100 times”)

- Extended thinking budgets (64K-128K tokens) for complex reasoning tasks

- Temperature tuning for different task types (e.g., temperature 1.0 for mathematics)

Conclusion

Claude Sonnet 4.5 brings together several capabilities that production AI applications need: state-of-the-art coding performance, sustained focus on long tasks, stronger computer use, and robust safety measures. Together, they make a model that can handle real-world complexity.

The simultaneous release of the Claude Agent SDK and ecosystem improvements suggests Anthropic is focused not just on model capabilities, but on building the infrastructure necessary for deploying these capabilities at scale. For developers and enterprises, this release provides both the intelligence and the tooling needed to build sophisticated AI-powered systems.

The reported 30+ hours of sustained focus, combined with parallel tool execution and more capable context management, positions Claude Sonnet 4.5 for genuinely hard problems: the kind that require not just intelligence, but persistence, coordination, and architectural thinking.